The Moment the Agent Stalls

It works beautifully in the demo.

The AI agent reads a customer query, checks inventory, calculates pricing, drafts a response and routes an approval, all within seconds. The board nods. The roadmap accelerates. “Scale this,” someone says.

Then it hits production.

The agent cannot access live inventory because the ERP updates overnight. The CRM API rate-limits under load. Compliance requires manual approval gates. Latency creeps in. Edge cases multiply. What felt autonomous becomes brittle.

The issue is not intelligence.

It is architecture.

Agentic AI Is Not an Add-On. It Is an Architectural Stress Test.

Agentic AI changes the demands placed on enterprise systems.

Traditional enterprise software was designed around transactions and reports. Data flows were predictable. Processing could happen in batches. Human oversight filled the gaps between systems.

Agentic systems operate differently. They require continuous perception, reasoning and action. They depend on real-time state awareness and event-driven coordination. They assume data freshness and API reliability.

Most enterprise stacks were never designed for that.

The evidence is difficult to ignore. Multiple industry reports now estimate that roughly 90–95% of enterprise AI pilots fail to reach meaningful production impact. The failure is rarely due to model quality alone. It stems from integration friction, governance bottlenecks and infrastructural mismatch.

In other words: architectural debt surfaces instantly when autonomy is introduced.

What Breaks First

When organisations attempt to layer agents onto legacy systems, four assumptions typically collapse.

Batch Assumptions. Data warehouses update nightly. ETL jobs run on schedules. Agents, however, require up-to-the-minute state. When fraud models or logistics agents rely on yesterday’s snapshot, autonomy degrades into guesswork.
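One way to make the batch problem visible is to have the agent check the age of the data it is about to act on. The sketch below is illustrative only: `Snapshot`, `decide` and the five-minute freshness threshold are hypothetical names and values, not part of any particular platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Snapshot:
    """A value read from a system of record, plus when it was last refreshed."""
    value: int
    refreshed_at: datetime


def decide(snapshot: Snapshot, max_age: timedelta, now: datetime) -> str:
    """Act only on sufficiently fresh state; otherwise escalate instead of guessing."""
    if now - snapshot.refreshed_at <= max_age:
        return "act"
    return "escalate"


# Hypothetical readings: one from a nightly batch load, one from a live stream.
now = datetime(2026, 1, 15, 9, 0, tzinfo=timezone.utc)
nightly = Snapshot(value=120, refreshed_at=datetime(2026, 1, 15, 2, 0, tzinfo=timezone.utc))
streamed = Snapshot(value=118, refreshed_at=now - timedelta(seconds=30))

print(decide(nightly, timedelta(minutes=5), now))   # escalate
print(decide(streamed, timedelta(minutes=5), now))  # act
```

A guard like this does not fix the batch dependency. It only stops the agent from treating a seven-hour-old snapshot as current state.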

Siloed Ownership. In many enterprises, data lives in departmental silos governed by separate policies. Agents attempting cross-functional action expose these boundaries. Access requests become tickets. Tickets become delays. Intelligence stalls.

Synchronous Dependencies. Traditional microservices often depend on chained API calls. Under agent-driven load, those chains amplify latency: when one service slows, the entire decision loop stalls.
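A minimal sketch of one possible mitigation, assuming the downstream calls are independent of each other: issue them concurrently and give each a deadline, so a slow service degrades one answer rather than the whole loop. The service names and delays are invented for illustration, and `asyncio.sleep` stands in for real network calls.

```python
import asyncio


async def call_service(name: str, delay: float) -> str:
    """Stand-in for a network call; `delay` simulates the service's response time."""
    await asyncio.sleep(delay)
    return f"{name}:ok"


async def call_with_timeout(name: str, delay: float, timeout: float) -> str:
    """Bound each call so one slow dependency cannot stall the loop indefinitely."""
    try:
        return await asyncio.wait_for(call_service(name, delay), timeout)
    except asyncio.TimeoutError:
        return f"{name}:timeout"


async def gather_state(timeout: float) -> list[str]:
    # Hypothetical services and simulated response times in seconds.
    services = {"inventory": 0.01, "pricing": 0.02, "crm": 0.5}
    calls = (call_with_timeout(n, d, timeout) for n, d in services.items())
    # gather() runs the calls concurrently and returns results in input order.
    return await asyncio.gather(*calls)


results = asyncio.run(gather_state(timeout=0.1))
print(results)  # ['inventory:ok', 'pricing:ok', 'crm:timeout']
```

The agent still has to decide what to do with a timed-out answer. That is a design question the timeout exposes rather than solves.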

Governance as Afterthought. AI governance is frequently layered on top of pilots rather than embedded in architecture. By month six, compliance reviews and audit requirements introduce manual checkpoints that negate autonomy.

None of these were fatal in the era of dashboards and reports. They are fatal in the era of decision loops.

The Illusion of Readiness

Many organisations believe they are AI-ready because they have cloud infrastructure, APIs and data lakes.

Yet McKinsey reports that while most firms have launched AI initiatives, only a small fraction consider themselves mature in embedding AI into workflows. Another industry survey found that around 42% of companies had abandoned most of their AI initiatives within the year, up sharply from the year before.

These are not capability failures. They are structural ones.

A pilot can be built inside a sandbox. It can use curated data. It can bypass complex security layers. It can avoid real-world load.

Production cannot.

Once an agent must operate across ERP, CRM, identity systems, compliance layers and customer channels, architectural seams become visible. And brittle seams do not support autonomy.

Why This Matters Now

In 2024 and 2025, experimentation was celebrated. Boards approved budgets for pilots. Teams demonstrated generative AI prototypes with enthusiasm.

By 2026, scrutiny has intensified.

CIOs are being asked not whether AI is innovative, but whether it is durable.

Agentic systems promise continuous decision-making: automated routing, adaptive optimisation, governed autonomy. But those promises rest on infrastructure capable of sustaining continuous loops.

If your architecture introduces seconds of delay at each hop, the cumulative effect across a multi-step decision loop can stretch into minutes. For customer-facing systems, that is unacceptable. For operational systems, it is destabilising.
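The arithmetic is simple enough to sketch. The hop latencies and step count below are invented for illustration, and the model assumes every reasoning step traverses every hop in sequence.

```python
def loop_latency_seconds(hop_latencies: list[float], steps: int) -> float:
    """Total time for a loop that visits every hop sequentially, `steps` times."""
    return sum(hop_latencies) * steps


# Hypothetical per-hop delays in seconds: ERP, CRM, pricing, approval.
hops = [2.0, 1.5, 3.0, 2.5]

# An agent that needs eight reasoning steps pays the full chain eight times.
total = loop_latency_seconds(hops, steps=8)
print(total)  # 72.0
```

Nine seconds per pass looks tolerable in isolation. Eight passes turn it into more than a minute.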

The strategic implication is stark.

You cannot “bolt on” agentic AI to a batch-era stack.

You must redesign around it.

What an Agent-Ready Architecture Requires

Organisations that successfully move from pilot to production share several architectural traits.

They prioritise event-driven design. Systems publish state changes rather than waiting for queries. Agents subscribe to events rather than polling for updates.
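The publish-and-subscribe pattern can be sketched in a few lines. This is an in-process toy, not a streaming platform: `EventBus`, `InventoryAgent` and the topic name `inventory.stock_changed` are hypothetical, and a production system would typically rely on a durable message broker instead.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-memory publish/subscribe hub (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Push the state change to every subscriber as soon as it happens.
        for handler in self._subscribers.get(topic, []):
            handler(event)


class InventoryAgent:
    """Keeps a local view of stock, updated by events rather than polling."""

    def __init__(self) -> None:
        self.stock: dict[str, int] = {}

    def on_stock_changed(self, event: dict) -> None:
        self.stock[event["sku"]] = event["quantity"]


bus = EventBus()
agent = InventoryAgent()
bus.subscribe("inventory.stock_changed", agent.on_stock_changed)

# The system of record publishes the change; the agent never has to ask.
bus.publish("inventory.stock_changed", {"sku": "A-100", "quantity": 42})
print(agent.stock)  # {'A-100': 42}
```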

They unify data semantically, not just physically. A single source of truth is less important than a single interpretation of state across systems.

They embed observability across data pipelines, model inference and agent actions. When something drifts (data quality, latency, model performance), it is detected immediately.
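As a rough sketch of what detecting drift can mean in practice, the check below compares a recent window of a metric against a baseline and flags a relative shift beyond a tolerance. The metric values and the 25% tolerance are assumptions chosen for illustration, and the function assumes the baseline mean is non-zero. Real observability stacks use richer statistics.

```python
from statistics import mean


def drifted(baseline: list[float], recent: list[float], tolerance: float) -> bool:
    """True if the recent mean differs from the baseline mean by more than
    `tolerance`, expressed as a fraction of the baseline mean."""
    base = mean(baseline)
    return abs(mean(recent) - base) / base > tolerance


# Hypothetical latency samples in milliseconds.
baseline_latency_ms = [110, 120, 115, 125, 130]   # mean is 120
slow_latency_ms = [180, 175, 190]
steady_latency_ms = [118, 122, 121]

print(drifted(baseline_latency_ms, slow_latency_ms, tolerance=0.25))    # True
print(drifted(baseline_latency_ms, steady_latency_ms, tolerance=0.25))  # False
```

The same shape of check applies to data quality or model performance, given a suitable numeric metric.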

They treat governance as architecture, not process. Policy-as-code enforces constraints automatically rather than through manual review cycles.
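Policy-as-code can be as plain as a rule table that every agent action must pass before it executes. The sketch below is a hypothetical illustration: the action kinds, the limits and the deny-by-default choice are assumptions, and dedicated policy engines offer far more than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str
    amount: float
    actor: str


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


# Hypothetical policy table: maximum amount an agent may approve per action kind.
LIMITS = {"refund": 500.0, "discount": 100.0}


def evaluate(action: Action) -> Decision:
    """Check an action against the policy table; deny anything not covered."""
    limit = LIMITS.get(action.kind)
    if limit is None:
        return Decision(False, f"no policy defined for '{action.kind}'")
    if action.amount > limit:
        return Decision(False, f"{action.kind} exceeds limit of {limit}")
    return Decision(True, "within policy")


print(evaluate(Action("refund", 120.0, "agent-7")).allowed)    # True
print(evaluate(Action("refund", 900.0, "agent-7")).allowed)    # False
print(evaluate(Action("wire", 10.0, "agent-7")).allowed)       # False
```

Because each decision carries a reason, the same function that enforces the constraint also produces the audit trail.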

And they assign end-to-end ownership. One accountable leader carries responsibility from prototype through live operation.

Without these foundations, agents become ornamental. They function in demos, not in reality.

The Human Consequence

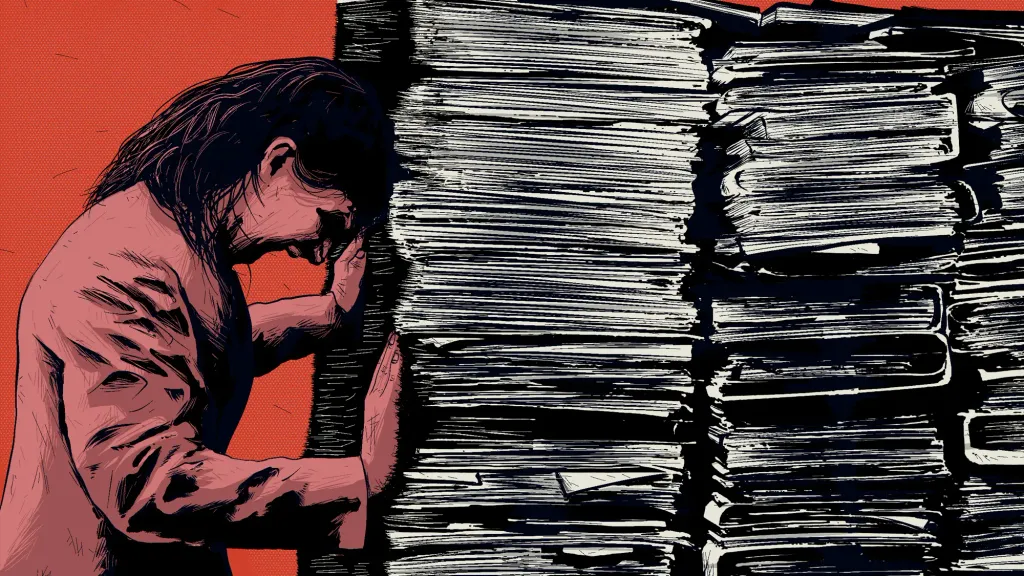

For architects and platform teams, this crisis is professional as much as technical.

You may find that systems you have maintained reliably for years now appear fragile under agentic load. That is not failure. It is exposure.

Agentic AI reveals what was previously masked by human coordination.

When managers manually reconcile discrepancies between systems, architectural seams remain hidden. When agents attempt to reconcile them automatically, inconsistency surfaces immediately.

In effect, agentic AI removes the human buffer that absorbed systemic inefficiency.

The result can feel uncomfortable.

But it is clarifying.

What Changes in Leadership Thinking

Boards and executives must adjust their mental model.

The question is no longer, “Which AI tool should we deploy?”

It is, “Is our architecture capable of supporting autonomous decision loops?”

That reframing alters investment priorities.

Budget shifts from isolated model experimentation to streaming platforms, integration layers, unified identity systems and observability tooling.

Transformation roadmaps include refactoring legacy systems before deploying agents.

Workforce planning includes reskilling middle managers and engineers into orchestration and oversight roles rather than layering AI on top of unchanged structures.

The maturity signal is not the number of AI demos delivered.

It is the number of AI systems operating reliably at scale.

The Way Forward

The architecture crisis is not a warning to slow down.

It is a call to build deliberately.

Agentic AI will not wait for perfect conditions. Competitive pressure ensures adoption continues. But organisations that treat architecture as foundational rather than optional will separate themselves quickly.

The playbook is pragmatic:

Start with one high-value use case embedded deeply in workflow.

Refactor data flows to eliminate batch dependencies.

Instrument observability before scaling autonomy.

Embed governance into system design, not post-hoc review.

Assign ownership across the full lifecycle.

In the first year, much of the work will feel like plumbing rather than innovation.

But plumbing determines pressure.

And pressure determines whether intelligence flows.

Short Summary

Agentic AI does not create architectural debt.

It exposes it.

The organisations that succeed will not be those that talk most confidently about autonomy.

They will be those that quietly rebuild the foundations beneath it.